The course content for End to End Data Science Practicum with Knime is available on Udemy. You can also register for the course with the discount coupon below:

https://www.udemy.com/datascience-knime/?couponCode=KNIME_DATASCIENCE

- Introduction to the Course

- What is this course about?

- How are we going to cover the content?

- Which tool are we going to use?

- What is unique about the course?

- What is a Data Science Project? Our Methodology

- Project Management Techniques

- KDD

- CRISP-DM

- Working environment and Knime

- Installation of Knime

- Versioning of Knime

- Resources (Documentation, Forums and extra resources)

- Welcome Screen and working environment of Knime

- Welcome to Data Science

- Understanding of a workflow

- First end-to-end problem: Teaching the machine

- First end-to-end workflow in Knime

- Understanding Problem

- Types of analytics

- Descriptive Analytics and some classical problems

- Predictive Analytics

- Prescriptive Analytics

- Understanding Data

- File Types

- Coloring Data

- Scatter Matrix

- Visualization and Histograms

- Data Preprocessing (Excel files: cscon_gender, cscon_age)

- Row Filtering

- Rule Based Row Filtering

- Column Filtering

- Group By, Aggregate

- Join and Concatenation

- Missing Values and Imputation

- Date and Time operations

- Example 1

- Feature Engineering

- Encoding: One-to-Many

- Rule Engine

- Imbalanced Data: Subsampling, SMOTE
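One-to-many encoding replaces a categorical column with one 0/1 indicator column per category, so that algorithms expecting numeric inputs can use it. The plain-Python sketch below illustrates the idea outside Knime; the function name `one_to_many` and the example columns `age` and `gender` are hypothetical, and rows are assumed to be dictionaries.

```python
def one_to_many(rows, column):
    """Replace `column` with one 0/1 indicator column per distinct value."""
    # Keep categories in order of first appearance so the new columns are stable.
    categories = []
    for row in rows:
        if row[column] not in categories:
            categories.append(row[column])

    encoded = []
    for row in rows:
        # Copy every other column unchanged.
        new_row = {key: value for key, value in row.items() if key != column}
        for category in categories:
            new_row[f"{column}={category}"] = 1 if row[column] == category else 0
        encoded.append(new_row)
    return encoded


# Hypothetical rows; "age" and "gender" are illustrative column names.
rows = [
    {"age": 34, "gender": "F"},
    {"age": 51, "gender": "M"},
    {"age": 27, "gender": "F"},
]
encoded = one_to_many(rows, "gender")
for row in encoded:
    print(row)
```

Each input row keeps its other columns and gains one indicator column per category seen in the data.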

- Models

- Introduction to Machine Learning: Test and Train Datasets

- Introduction to Machine Learning: Problem Types

- Classification Problems (Excel file: cscon_gender)

- Naive Bayes and Bayes Theorem

- Binning and Naive Bayes Practicum (click to download the workflow)

- Decision Tree

- Decision Tree Practicum (click here to download the workflow)

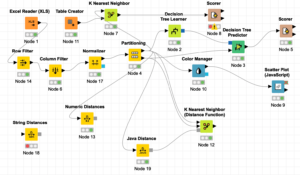

- K-Nearest Neighbors

- KNN Practicum (click to download the workflow)

- Distance Metrics of KNN

- Distance Metrics Practicum (click here to download the Knime workflow)

- Support Vector Machines

- Kernel Trick and SVM Kernels

- SVM Practicum

- End to End Practicum for Classification

- Extra: Logistic Regression

- Extra: Logistic Regression Practicum
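The idea behind K-Nearest Neighbors and its distance metrics fits in a few lines of plain Python: a query point takes the majority label of its k closest training points, and swapping the distance function changes which points count as closest. The data points, labels and function names below are made up for illustration; this is a sketch of the technique, not how Knime's KNN node is implemented.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train, query, k=3, distance=euclidean):
    """Majority vote among the k training points closest to `query`.

    `train` is a list of (features, label) pairs.
    """
    neighbors = sorted(train, key=lambda item: distance(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    # most_common keeps first-seen order for equal counts, so a tie goes to
    # the label of the closer neighbor.
    return votes.most_common(1)[0][0]


# Hypothetical training data with two well-separated classes.
train = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"),
    ((7.0, 7.5), "B"),
    ((6.5, 5.5), "B"),
]
print(knn_predict(train, (2.0, 2.0), k=3))                        # A
print(knn_predict(train, (6.0, 7.0), k=3, distance=manhattan))    # B
```

Passing the distance as a parameter mirrors the "Distance Metrics of KNN" topic: the same voting logic works with any metric.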

- ARM (Association Rule Mining) Problems

- Clustering Problems

- Regression Problems

- Knime as a Tool: Some Advanced Operations

- Evaluation

- Introduction to Evaluation

- ZeroR Algorithm, Imbalanced Data Set and Baseline

- k-fold Cross Validation

- Confusion Matrix, Precision, Recall, Sensitivity, Specificity

- Evaluation of Clustering: Purity, Rand Index

- Evaluation of Prediction: RMSE, RMAE, MSE, MAE

- Evaluation Practicum with Knime: Example 3

- Evaluation of ARM
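Several of the evaluation measures in this section can be written out directly. The sketch below assumes a binary problem with a chosen positive class and uses made-up labels and values; it computes a ZeroR (majority-class) baseline, the confusion-matrix counts with precision, recall (sensitivity) and specificity, and the MSE, RMSE and MAE regression errors. It illustrates the formulas rather than Knime's implementation, and returning 0.0 when a ratio is undefined is a convention chosen here.

```python
import math
from collections import Counter


def zero_r(labels):
    """ZeroR baseline: always predict the most frequent class."""
    return Counter(labels).most_common(1)[0][0]


def confusion_counts(actual, predicted, positive):
    """Return (TP, FP, TN, FN) for a binary problem with the given positive class."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif a == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def ratio(numerator, denominator):
    # Convention used here: report 0.0 when the ratio is undefined.
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),  # also called sensitivity
        "specificity": ratio(tn, tn + fp),
    }


def regression_errors(actual, predicted):
    errors = [a - p for a, p in zip(actual, predicted)]
    mse = sum(e * e for e in errors) / len(errors)
    return {
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(e) for e in errors) / len(errors),
    }


# Made-up labels for illustration.
actual = ["yes", "no", "no", "yes", "no", "no", "no", "yes"]
predicted = ["yes", "no", "yes", "no", "no", "no", "no", "yes"]

tp, fp, tn, fn = confusion_counts(actual, predicted, positive="yes")
metrics = classification_metrics(tp, fp, tn, fn)

# ZeroR is normally fit on training labels; the same list is reused here for brevity.
baseline = zero_r(actual)
baseline_accuracy = sum(a == baseline for a in actual) / len(actual)

reg = regression_errors([3.0, 5.0, 2.5], [2.5, 5.0, 4.0])
print(metrics, baseline, baseline_accuracy, reg)
```

Comparing a model's accuracy with the ZeroR accuracy shows whether it beats simply predicting the majority class, which matters most on imbalanced data.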


- Reporting

- Exporting Reports to Images (Data to Report)

- Connecting Knime with other Languages

- Java Snippet

- R Snippet

- Python Snippet

- Meta Learners

- Ensemble Techniques: Bagging, Boosting and Fusion

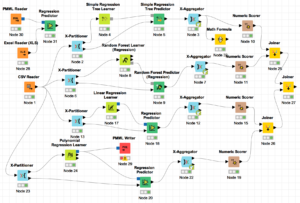

- Random Forest ensemble learning technique for Classification

- Random Forest ensemble learning technique for Regression

- Random Forest Practicum

- Gradient Boosted Tree Regression

- Gradient Boosted Tree Regression Practicum

- Example 4

- Deep Learning

- Introduction to artificial neural networks

- Linearly Separable Problems and beyond

- DL4J Extension

- Real-Life Practicums

- Resources on real-life applications: job search, forums, competitions, etc.

- Predicting whether the customer will pay

- Predicting the payment period

- Credit Limit

- Customer Segmentation

- Bonus

- Loading different train and test datasets

- Data Preprocessing Practicum (click to download the Knime file)

- Regression Practicum (Simple Linear Regression, Multiple Linear Regression, Correlation Matrix, p-Value and Feature Selection (Backward Elimination, Forward Selection)). Knime files: File 1, File 2

- Comparing Regression Models: Decision Tree Regression, Random Forest Regression, Linear Regression, Polynomial Regression (Click to Download the Knime File)

- Evaluation of Regression Models (R² and Adjusted R²), Introduction to Classification Problems and Logistic Regression

- Logistic Regression and Introduction to Classification (Click to Download the Knime File)
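R² compares a model's squared errors with those of always predicting the mean, R² = 1 - SS_res / SS_tot, and adjusted R² penalizes the score for the number of predictors p given n observations: adjusted R² = 1 - (1 - R²)(n - 1)/(n - p - 1). The minimal Python sketch below uses made-up values and assumes a model with two predictors; it illustrates the formulas, not the output of any Knime node.

```python
def r_squared(actual, predicted):
    """R^2 = 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """n = number of observations, p = number of predictors (intercept not counted)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


actual = [1, 2, 3, 4, 5]               # made-up target values
predicted = [1.1, 1.9, 3.2, 3.8, 5.0]  # made-up model outputs
r2 = r_squared(actual, predicted)
adj = adjusted_r_squared(r2, n=len(actual), p=2)  # assuming a model with 2 predictors
print(f"R2={r2:.3f}, adjusted R2={adj:.3f}")
```

Adding a predictor never lowers R² on the training data, but it can lower adjusted R², which is why the adjusted version is preferred when comparing models with different numbers of features.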

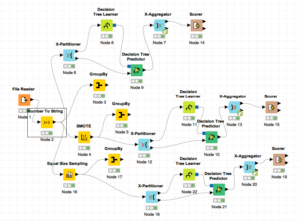

- Time Series Analysis and Classification Algorithms: Decision Tree, Random Forest

- Temporal Feature Extraction (Date and Time) (Click to Download Knime File) (sample CSV file: )

- Time Series Analysis and a Moving Average Example (Click to Download Knime File)
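Temporal feature extraction and a moving average are both simple to express in code. The sketch below uses only Python's standard `datetime` module to split a timestamp into calendar features and to compute a trailing simple moving average; the feature names, the example timestamp and the series values are hypothetical, and using a trailing (rather than centered) window is an assumption.

```python
from datetime import datetime


def temporal_features(timestamp):
    """Split a datetime into simple calendar features (feature names are illustrative)."""
    return {
        "year": timestamp.year,
        "month": timestamp.month,
        "day_of_week": timestamp.isoweekday(),  # 1 = Monday ... 7 = Sunday
        "hour": timestamp.hour,
    }


def moving_average(values, window):
    """Trailing simple moving average; positions before a full window get None."""
    return [
        sum(values[i - window + 1 : i + 1]) / window if i >= window - 1 else None
        for i in range(len(values))
    ]


features = temporal_features(datetime(2023, 3, 14, 9, 30))
print(features)

daily_values = [10, 12, 14, 13, 15]  # hypothetical series
print(moving_average(daily_values, window=3))  # [None, None, 12.0, 13.0, 14.0]
```

Features such as day of week or hour often carry more signal for a classifier than the raw timestamp, and the moving average smooths short-term fluctuations in the series.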

- Titanic Data Set (CSV)

- Classification Example (Click to Download Knime File)

- Imbalanced Datasets

- Imbalanced Data Sets and Solutions: Undersampling, SMOTE (Click to Download Knime File)
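Random undersampling discards majority-class rows until the classes are balanced, while SMOTE creates new minority rows by interpolating between a minority sample and one of its k nearest minority neighbors. The plain-Python sketch below is a simplified SMOTE-style illustration: it picks base samples at random rather than iterating over every minority sample as the original algorithm does. The data and the function names `random_undersample` and `smote` are hypothetical.

```python
import random


def random_undersample(samples, labels, seed=0):
    """Keep a random subset of each class, as large as the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    smallest = min(len(group) for group in by_class.values())
    kept_samples, kept_labels = [], []
    for label, group in by_class.items():
        for sample in rng.sample(group, smallest):
            kept_samples.append(sample)
            kept_labels.append(label)
    return kept_samples, kept_labels


def smote(minority, n_new, k=2, seed=0):
    """Simplified SMOTE: interpolate between random minority samples and near minority neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base_index = rng.randrange(len(minority))
        base = minority[base_index]
        # The k nearest other minority samples, by squared Euclidean distance.
        neighbors = sorted(
            (m for i, m in enumerate(minority) if i != base_index),
            key=lambda m: sum((a - b) ** 2 for a, b in zip(base, m)),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # random position on the segment from base to neighbor
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbor)))
    return synthetic


# Hypothetical data: 6 majority rows ("no") and 2 minority rows ("yes").
samples = list(range(8))
labels = ["no"] * 6 + ["yes"] * 2
balanced_samples, balanced_labels = random_undersample(samples, labels)

minority = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.3), (1.1, 1.1)]
new_points = smote(minority, n_new=5, k=2, seed=42)
print(balanced_labels)
print(new_points)
```

Undersampling throws information away, whereas SMOTE keeps every row and adds plausible minority points; either should be applied only to the training split, never to the test data.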

- External Links

- Customer Segmentation and Python (Click to Download Knime File, Click to Download Dataset)

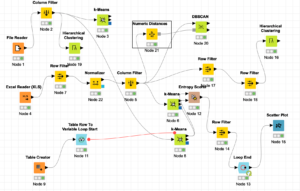

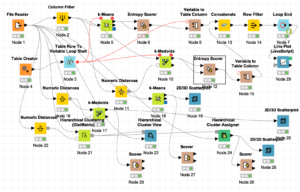

- Comparison of Clustering Algorithms: K-Means, K-Medoids and Hierarchical Clustering (HC), and Finding the Optimum Number of Clusters with WCSS for K-Means (cluster distance functions: min, max, group average, center, Ward's method): Click to download the Knime file

- Steps for the workflow above:

1. Load the Iris dataset.

   1.1. Filter out the class column so it is not used as a clustering input.

2. For K-Means and K-Medoids:

   2.1. Find the best number of clusters.

   2.2. Cluster with K-Means and K-Medoids.

3. Find the best K value for Hierarchical Clustering (HC).

4. Cluster with HC.

5. Cluster with K=3.

6. Compare your results with K=3.

7. Report the best clustering. Evaluations:

   - HC: 24 errors
   - K-Means: 17 errors
   - K-Medoids: 16 errors
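Finding the best number of clusters for K-Means is commonly done with the elbow method: run K-Means for several values of k, compute the WCSS (within-cluster sum of squares) for each, and pick the k after which WCSS stops dropping sharply. The pure-Python sketch below illustrates this on a small made-up set of 2-D points rather than the Iris data. It uses the first k points as starting centroids, which is a simplification (k-means++ initialization is the more common choice in practice), and it is not the Knime workflow itself.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points, k, max_iterations=100):
    """Lloyd's algorithm; the first k points are the initial centroids (a simplification)."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        new_centroids = [
            tuple(sum(values) / len(cluster) for values in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters


def wcss(centroids, clusters):
    """Within-cluster sum of squares: squared distance from every point to its own centroid."""
    return sum(
        squared_distance(p, centroids[i]) for i, cluster in enumerate(clusters) for p in cluster
    )


# Hypothetical 2-D points forming three well-separated groups.
points = [
    (1.0, 1.0), (8.0, 8.0), (1.0, 8.0),
    (1.5, 1.2), (8.2, 7.8), (1.2, 8.3),
    (0.8, 1.4), (7.9, 8.4), (0.9, 7.7),
]
scores = {k: wcss(*k_means(points, k)) for k in range(1, 5)}
for k, score in scores.items():
    print(f"k={k}: WCSS={score:.2f}")
```

WCSS always shrinks as k grows, so the useful signal is where the decrease flattens: here the drop from k=2 to k=3 is large and the drop from k=3 to k=4 is small, which points to three clusters.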

- Text Mining

- Click to download the sample text file

- RSS Workflow (Required Extensions: Palladian and Text Processing)

- Text Tagging and Tag Cloud: Click to Download Knime Workflow

- Text Classification: Click to Download Knime Workflow
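A tag cloud sizes each term by how often it occurs. The sketch below shows that counting step in plain Python on a made-up sentence; the tiny stop-word list, the regular-expression tokenizer and the linear size mapping are simplifying assumptions, not what Knime's Text Processing nodes do internally.

```python
import re
from collections import Counter

# Tiny, hypothetical stop-word list; real pipelines use much larger ones.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "for"}


def term_frequencies(text, top_n=5):
    """Lower-case the text, split it into alphabetic tokens, drop stop words, count terms."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS).most_common(top_n)


def tag_sizes(frequencies, min_size=10, max_size=40):
    """Scale each term's count linearly onto a font-size range, as a tag cloud would."""
    counts = [count for _, count in frequencies]
    low, high = min(counts), max(counts)
    span = (high - low) or 1  # avoid dividing by zero when all counts are equal
    return {
        term: min_size + (count - low) * (max_size - min_size) / span
        for term, count in frequencies
    }


text = "Data science uses data. Knime makes data science workflows visual, and workflows are reusable."
top = term_frequencies(text, top_n=3)
sizes = tag_sizes(top)
print(top)
print(sizes)
```

The same term counts, arranged as one column per term, are also a common starting point for the text classification workflow.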